James Serra aims to clear up some confusion:

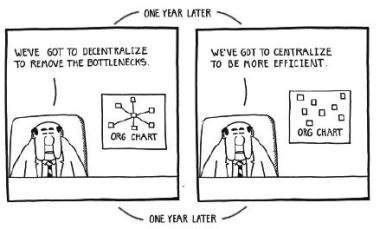

I have done a ton of research lately on Data Mesh (see the excellent *Building a successful Data Mesh – More than just a technology initiative* for more details) and have some concerns about the paradigm shifts it requires. My last blog post tackled one of them: centralized vs. decentralized data architecture. In this one I want to talk about centralized vs. decentralized ownership, along with a closely related paradigm shift (or core principle): siloed data engineering teams vs. cross-functional data domain teams.

First, I wanted to mention that there is a Data Mesh Learning Slack channel that I have spent a lot of time reading, and what is apparent is that there is a lot of confusion about exactly what a data mesh is and how to build one. I see this as a major problem: the more difficult a concept is to explain, the more difficult it will be for companies to build it successfully. So the promise of a data mesh lowering the failure rate of big data projects will be hard to achieve if we can’t all agree on exactly what a data mesh is. What’s more, the core principles of the data mesh sound great in theory but will be challenging to implement, hence my thoughts in this blog on centralized vs. decentralized ownership.

Read on for James’s take on the matter.
