James Serra looks at a pattern:

A centralized data architecture means the data from each domain/subject (i.e. payroll, operations, finance) is copied to one location (i.e. a data lake under one storage account), and that the data from the multiple domains/subjects are combined to create centralized data models and unified views. It also means centralized ownership of the data (usually IT). This is the approach used by a Data Fabric.

A decentralized distributed data architecture means the data from each domain is not copied but rather kept within the domain (each domain/subject has its own data lake under one storage account) and each domain has its own data models. It also means distributed ownership of the data, with each domain having its own owner.

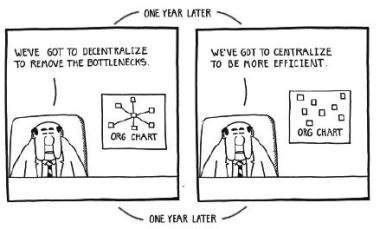

So is decentralized better than centralized?

Read on for James’s answer, and allow me to include a Dilbert cartoon so old, the boss didn’t even have pointy hair yet.